Part 2: Write IT down

In this part of the book, we are now going to focus on the second main idea of this book: Write IT down. We now need to acknowledge that our brains are fallible (and ageing) and thus we need to write down many safeguards to ensure that our analyses can be of high quality.

We cannot leave code quality, documentation and finally its reproducibility to chance. We need to write down everything we need to ensure the long-term reproducibility of our pipelines.

The reproducibility iceberg

I think it is time to reflect on why I bothered with the first part of the book at all, because for now, I didn’t really teach you anything directly related to reproducibility, so why didn’t I just jump straight to the reproducibility part?

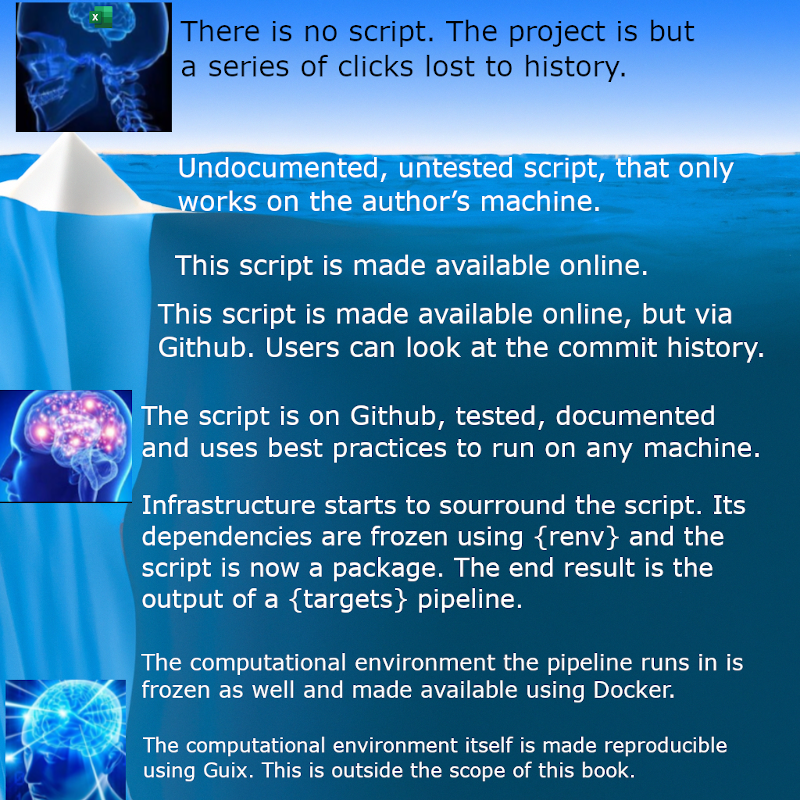

Remember the introduction, where I talked about the reproducibility continuum or spectrum? It is now time to discuss this in greater detail. I propose a new analogy, the reproducibility iceberg:

Why an iceberg? Because the parts of the iceberg that you see, those that are obvious, are like running your analyses in a click-based environment like Excel. This is what’s obvious, what’s easy. No special knowledge or even training is required. All that’s required is time, so people using these tools are not efficient and thus compensate by working insane hours (I can’t go home and enjoy time with my family I have to stay at the office and update the spreadsheeeeeeeeet clicks furiously).

Let’s go one level deeper: let’s write a script. This is where we started. Our script was not too bad, it did the job. Unlike a click-based workflow, we could at least re-read it, someone else could read it, and it would be possible to run in the future but likely with some effort unless we’re lucky. By that, I mean that for such a script to run successfully in the future, that script cannot rely on packages that got updated in such a way that the script cannot run anymore (for example, if functions get renamed, or if their arguments get renamed). Furthermore, if that script relies on a data source, the original authors also have to make sure that the same data source stays available. Another issue is collaborating when writing this script. Without any version control tools nor code hosting platform, collaborating on this script can very quickly turn into a nightmare.

This is where Git and Github.com came into play, one level deeper. The advantage now is that collaboration was streamlined. The commit history is available to all the teammates and it is possible to revert changes, experiment with new features using branches and overall manage the project. In this layer we also employ new programming paradigms to make the code of the project less verbose, using functional programming, with the added benefits of making it easier to test, document and share (which we will discuss to its fullest in this part of the book). Using literate programming, it is also much easier to go to our final output (which is usually a report). We implemented DRY ideas to the fullest to ensure that our code was of high quality.

At this depth, we are at a pivotal moment: in many cases, analysts may want to stop here because there is no more time or budget left. After all, the results were obtained and shared with higher-ups. It can be difficult, in some contexts, to justify spending more time to go deeper and write tests, documentation and otherwise ensure total reproducibility. So at this stage, we will see what we can do that is very cheap (in both time and effort) to ensure the minimal amount of reproducibility, which is recording packages versions. Recording packages means that the exact same versions of the packages that were used originally will get used regardless of when in the future we rerun the analysis.

But if budget and time allow we can still go deeper, and definitely should. One day, you will want to update your script to use the newest functionality of your preferred package, but with package version recording, you will be stuck in the past with a very old version and its dependencies. How will you know that upgrading this package will not break anything anywhere in your workflow? Also, we want to make running the script as easy as possible, and ideally, as non-interactively as possible. Indeed, any human interaction with the analysis is a source of errors. That’s why we need to thoroughly and systematically test our code. These tests also need to run non-interactively. Using the tools described in part two of this book, we can actually set up the project, right from the very beginning, in a way that it will be reproducible quite naturally. By using the right tools and setting things up right, we don’t really need to invest more time to make things reproducible. The project will simply be reproducible because it was engineered that way. And I insist, at practically no additional cost!

Another problem with only recording packages’s version is that in practice, it is very often not enough. This is because installing older versions of packages can be a challenge. This can be the case for two reasons:

- These older packages need also an older version of R, and installing old versions of R can be tricky, depending on your operating system;

- These older packages might need to get compiled and thus depend themselves on older versions of development libraries needed for compilation.

So to solve this issue, we will also need a way to freeze the computational environment itself, and this is where we will use Docker.

Finally, and this is the last level of the iceberg and not part of this book, is the need to make the building of the computational environment reproducible as well. Guix is the tool that enables one to do just that. However, this is a very deep topic unto itself, and there are workarounds to achieve this using Docker, so that’s why we will not be discussing Guix.

We will travel down the iceberg in the coming chapters. First, we will use what we’ve learned up until now to rewrite our project using functional and literate programming. Our project will not be two scripts anymore, but two Rmarkdown files that we can knit and that we can then read and also send to non-technical stakeholders.

Then, we are going to turn these two Rmds files into a package. This will be done by using Sébastien Rochette’s package {fusen}1. {fusen} makes it very easy to go from our Rmd files to a package, by using what Sébastien named the Rmarkdown first method. If at this stage it’s not clear why you would want to turn your analysis into a package, don’t worry, it’ll be once we’re done with this chapter.

Once we have a package, we can use {testthat} for unit testing, and base R functions for assertive programming. At this stage, our code should be well-documented, easy to share, and thoroughly tested.

I think I should emphasize the following: I started from very simple scripts, which is how most analyses are done. Then, using functional and literate programming, these scripts got turned into RMarkdown files, and in this part of the book these RMarkdown files will get turned into a package. It is important to understand the following point: I did this to illustrate how we can go from these simple scripts to something more robust, step by step. Of course, later, you can immediately start from either the RMarkdown files or from the package. My advice is to start from the package, because as you shall see, starting from the package is basically the same amount of effort as starting from a simple RMarkdown file, thanks to {fusen}, but now you have the added benefits of using package development facilities to improve your analysis.

Once you have the package, you can build a true pipeline using {targets}, an incredibly useful library for build automation (but if you prefer to keep it at simple RMarkdown files, you can also use {targets}, you don’t have to build a package).

Once we reached this stage, this is when we can finally start introducing reproducibility concretely. The reason it will take so long to actually make our pipeline reproducible is that we need solid foundations. There is no point in making a shaky analysis reproducible.

https://thinkr-open.github.io/fusen/↩︎